As a programmer, I wanted to learn how to develop a chatbot by creating my own. I focused on the following components of a bot: the Natural Language Understanding (NLU) component, the Dialog Manager (DM), and the Natural Language Generation (NLG) component. I also looked at the choice between a modularized and an end-to-end approach.

To build a successful chatbot, consider the user's point of view. First, the user writes something to the bot. The bot processes the message and generates a response. Then, the response is sent back to the user.

These steps repeat until one of the interlocutors decides to end the conversation. Let’s dig a little deeper into this process and analyze each step in more detail to understand its actual complexity.

In this post, I will walk you through the elements of a chatbot and explain what each step does. Chatbots are used in many contexts, including business, so knowing how to implement one can be valuable for your career as a developer.

The Elements Involved in Building a Chatbot

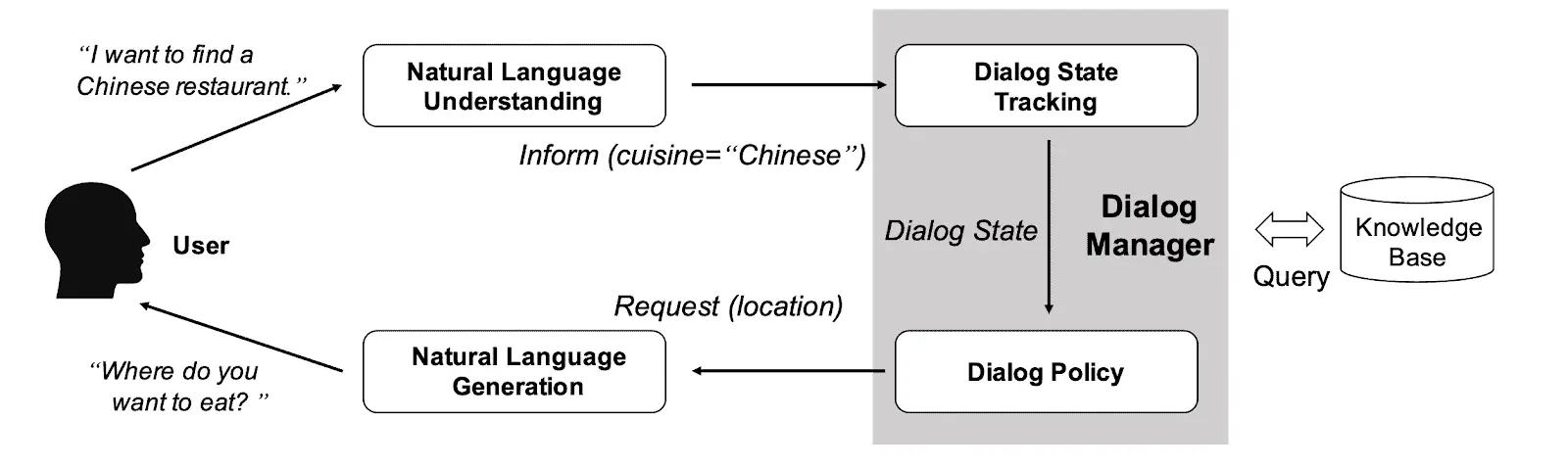

When the user writes something, the bot needs to process it. Since the bot doesn’t understand human language, we need a way to translate our natural, unstructured language into structured data that the machine can process and understand.

This step is called Natural Language Understanding (NLU). The structured data produced from the user message is called a “semantic frame.”

Once the user message has been processed and a semantic frame has been produced, the bot is ready to analyze this structured data to look for the best next actions to take, including generating a response.

In this step, the bot probably needs to remember data from the conversation so it doesn’t ask the same questions over and over. This process is carried out by a component called the Dialog Manager (DM), which keeps track of the conversation state and chooses an action according to a specific policy.

Finally, the action selected by the bot includes either a response for the user or the data needed to generate one. This data is passed to a component that translates it into a human-language response, which is shown to the user. This process is known as Natural Language Generation (NLG).

These are the elements involved in the process of building a bot. Let’s talk about them individually.

The NLU Component

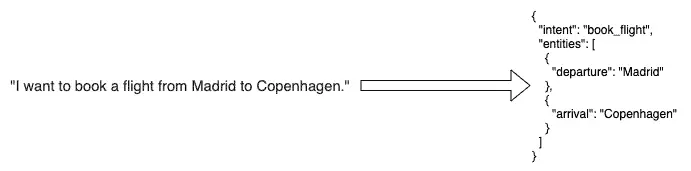

As explained earlier, the NLU component produces a semantic frame out of a message written in natural language. This frame is typically composed of an intent, which represents the user’s intention with the sent message, and a set of entities that represent data the user is giving within the message.

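For example, “I want to book a flight from Madrid to Copenhagen” could be translated into a semantic frame whose intent expresses the wish to book a flight and whose entities hold the origin city (Madrid) and the destination city (Copenhagen).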
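As a rough illustration, that semantic frame could be represented as a small Python data structure. The `SemanticFrame` class and the intent name `book_flight` are hypothetical choices for this sketch; the entity names `from_city` and `to_city` match the template placeholders used in the NLG section.

```python
from dataclasses import dataclass, field


@dataclass
class SemanticFrame:
    """Structured output of an NLU component (illustrative, not a library type)."""
    intent: str                                             # what the user wants to do
    entities: dict[str, str] = field(default_factory=dict)  # data given in the message


# Hypothetical frame for "I want to book a flight from Madrid to Copenhagen"
frame = SemanticFrame(
    intent="book_flight",
    entities={"from_city": "Madrid", "to_city": "Copenhagen"},
)
print(frame)
```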

To accomplish this translation, one can use classical Natural Language Processing (NLP) methods or more advanced methods using Deep Learning (DL).

Classical methods are normally used when there is little to no data, since DL methods require a large amount of data to perform well. However, with the advent of pre-trained models, one can choose the DL approach and fine-tune a model on custom data even when little of it is available.
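To make the classical end of the spectrum concrete, here is one of the simplest possible techniques: keyword matching for the intent and a regular expression for the entities. It is only a toy sketch. The keyword lists, the regex pattern, the intent names, and the `parse` function are all assumptions, and real classical pipelines usually rely on richer features and statistical classifiers.

```python
import re

# Hypothetical keyword rules: intent name -> trigger words or phrases
INTENT_KEYWORDS = {
    "book_flight": ["book", "flight", "fly"],
    "withdraw_money": ["withdraw"],
    "check_balance": ["balance", "how much money"],
}

# Hypothetical pattern capturing "from <City> to <City>"
ROUTE_PATTERN = re.compile(r"from\s+([A-Z][a-z]+)\s+to\s+([A-Z][a-z]+)")


def parse(message: str) -> dict:
    """Return a semantic frame as a dict with an intent and entities."""
    lowered = message.lower()
    # Score each intent by how many of its keywords occur in the message
    scores = {
        intent: sum(word in lowered for word in words)
        for intent, words in INTENT_KEYWORDS.items()
    }
    best_intent = max(scores, key=scores.get)
    intent = best_intent if scores[best_intent] > 0 else "unknown"

    # Extract the route entities when the pattern is present
    entities = {}
    match = ROUTE_PATTERN.search(message)
    if match:
        entities["from_city"], entities["to_city"] = match.groups()
    return {"intent": intent, "entities": entities}


print(parse("I want to book a flight from Madrid to Copenhagen"))
```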

The Dialog Manager

This component is the most critical one, because the success or failure of a conversation rests heavily on its shoulders. Of course, all the pieces involved in processing a conversation are very important. Nonetheless, the continuity and fluency of the dialog depend strongly on how well this component performs in both memory (via filled slots) and action selection.

That’s why this piece is generally composed of two subcomponents:

- The dialog state tracker (DST)

- The dialog policy (DP)

The DST keeps track of the conversation history, the slots, and all the information related to the context of the conversation. Slots are memory units filled with entities received via the semantic frame, database calls, webhook responses, and other sources.

The DP is the subcomponent in charge of choosing the next action, based on the semantic frame, the conversation history, or the confidence of the candidate actions.
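The split between the two subcomponents can be sketched in a few lines of Python. This sketch assumes a hand-written, rule-based policy and hypothetical slot and action names: the tracker stores the history and the slots, and the policy only reads the tracker to pick the next action.

```python
from dataclasses import dataclass, field


@dataclass
class DialogStateTracker:
    """Keeps the conversation history and the slots (memory units)."""
    history: list = field(default_factory=list)
    slots: dict = field(default_factory=dict)

    def update(self, frame: dict) -> None:
        # Record the turn and copy any received entities into the slots
        self.history.append(frame)
        self.slots.update(frame.get("entities", {}))


# Hypothetical requirements: slots each intent needs before the bot can act
REQUIRED_SLOTS = {"withdraw_money": ["amount", "account"]}


def dialog_policy(tracker: DialogStateTracker) -> str:
    """Rule-based policy: ask for the first missing slot, otherwise confirm."""
    intent = tracker.history[-1]["intent"]
    for slot in REQUIRED_SLOTS.get(intent, []):
        if slot not in tracker.slots:
            return f"ask_{slot}"
    return f"confirm_{intent}"


tracker = DialogStateTracker()
tracker.update({"intent": "withdraw_money", "entities": {"amount": "$50"}})
print(dialog_policy(tracker))  # ask_account
```

Keeping the policy as a pure function of the tracker is a design choice that makes it easy to replace later, for example with a learned policy.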

Let’s go through an example to understand the complexity this component has to deal with:

(1) User: “I’d like to withdraw $50 from my bank account.”

(2) Bot: “Which kind of account: current or credit?”

(3) User: “How much money do I have on my credit account?”

(4) Bot: “You have a balance of $100 in your credit account.”

(5) User: “Great! I’ll take it from that one then.”

(6) Bot: “OK, so you want to withdraw $50 from your credit account. Is that right?”

(7) User: “Yes, please.”

…

In (1), the intent inferred by the NLU component could be “withdraw_money,” with the entity “amount” filled with $50. The bot already knows the user (say, because they are logged in), so it fetches their info from the database and gets two accounts, “current” and “credit,” which fill the slot “available_accounts.”

The DP decides that the bot needs to know which account the user wants to withdraw from, so in (2) the next action is to ask which of the available accounts the money should come from. In (3), the user changes their goal and now wants to know the balance of their credit account.

The DM must be smart enough to continue the conversation after the goal change while keeping the info it has already memorized via slots. In (5), the user gets back to the initial goal, referring to past data (“that one”) without specifying it explicitly.

The bot must relate this reference to the credit account, and in (6) it grounds the collected data by asking the user to confirm it before executing the required action.
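One simple way to survive the goal change in (3) is to treat the balance question as a side request: answer it without touching the pending withdrawal goal or its slots, but remember which account was mentioned so that “that one” can be resolved later. The sketch below replays the example dialog under that assumption. The `WithdrawalDialog` class, the intent names `check_balance` and `refer_back`, and the $20 balance of the current account are hypothetical simplifications, not a description of any particular framework.

```python
class WithdrawalDialog:
    """Toy replay of the withdrawal example: slots survive a goal change."""

    def __init__(self, balances: dict[str, int]):
        self.balances = balances                          # hypothetical DB data
        self.slots = {"available_accounts": list(balances)}
        self.goal = None                                  # pending main goal
        self.last_account = None                          # referent for "that one"

    def handle(self, intent: str, entities: dict) -> str:
        if intent == "check_balance":
            # Side question: answer it without touching the pending goal or slots
            account = entities["account"]
            self.last_account = account
            return f"You have a balance of ${self.balances[account]} in your {account} account."
        if intent == "withdraw_money":
            self.goal = intent
            self.slots.update(entities)
        elif intent == "refer_back":
            # Resolve "that one" to the most recently mentioned account
            self.slots["account"] = self.last_account
        if self.goal == "withdraw_money":
            if "account" not in self.slots:
                options = " or ".join(self.slots["available_accounts"])
                return f"Which kind of account: {options}?"
            # Grounding: confirm the collected data before executing the action
            return (f"OK, so you want to withdraw ${self.slots['amount']} "
                    f"from your {self.slots['account']} account. Is that right?")
        return "Sorry, I didn't get that."


bot = WithdrawalDialog({"current": 20, "credit": 100})
print(bot.handle("withdraw_money", {"amount": 50}))        # turn (1)
print(bot.handle("check_balance", {"account": "credit"}))  # turn (3)
print(bot.handle("refer_back", {}))                        # turn (5)
```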

As you can see, it’s not an easy task; this process is the subject of substantial research by academics and companies. Depending on the target, one can choose a more straightforward approach or a more sophisticated one.

The easiest and least flexible practical approach is to model the dialog as a Finite State Machine (FSM), in which each state represents an action and the edges (transitions) are intents. The slots can be saved in a key/value data structure.
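A minimal sketch of that idea, assuming hypothetical state and intent names for a flight-booking flow: a transition table maps (state, intent) pairs to the next state, and a plain dict holds the slots.

```python
# Transition table: (current state, intent) -> next state, where states are actions
TRANSITIONS = {
    ("start", "book_flight"): "ask_destination",
    ("ask_destination", "inform_city"): "ask_date",
    ("ask_date", "inform_date"): "confirm_booking",
    ("confirm_booking", "affirm"): "book_and_say_goodbye",
    ("confirm_booking", "deny"): "ask_destination",
}


class FlightBookingFSM:
    def __init__(self):
        self.state = "start"
        self.slots = {}  # key/value memory for received entities

    def step(self, intent: str, entities: dict) -> str:
        self.slots.update(entities)
        # Unknown (state, intent) pairs leave the bot where it is,
        # which is exactly the rigidity that makes FSMs inflexible
        self.state = TRANSITIONS.get((self.state, intent), self.state)
        return self.state


fsm = FlightBookingFSM()
fsm.step("book_flight", {})
fsm.step("inform_city", {"to_city": "Copenhagen"})
print(fsm.state, fsm.slots)
```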

A more flexible approach is to combine an FSM with a frame-based system like the one described in the 1977 paper “GUS, A Frame-Driven Dialog System.”
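In a frame-based design, a frame lists the slots a task needs along with a question for each one. The user may fill them in any order, several at a time, and the system only asks for what is still missing. The sketch below loosely illustrates that idea and is not a reconstruction of GUS itself; the frame contents, prompts, and the `next_prompt` function are assumptions.

```python
from typing import Optional

# Hypothetical frame for flight booking: slot name -> question to ask if empty
FLIGHT_FRAME = {
    "from_city": "Where are you flying from?",
    "to_city": "Where would you like to go?",
    "date": "On which date?",
}


def next_prompt(frame: dict[str, str], filled: dict[str, str]) -> Optional[str]:
    """Return the question for the first unfilled slot, or None when complete."""
    for slot, question in frame.items():
        if slot not in filled:
            return question
    return None


filled = {}
# The user provides two slots in one message, in any order; no fixed path is needed
filled.update({"to_city": "Copenhagen", "from_city": "Madrid"})
print(next_prompt(FLIGHT_FRAME, filled))  # On which date?
```

Combined with an FSM, the frame would handle slot collection inside a state, while the FSM handles the transitions between tasks.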

A more sophisticated, but also more complex, approach is to model the dialog as a machine learning (ML) classification problem, where the dialog history, the previously chosen actions, and the slots are the features, and the actions are the classes to be predicted.
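To illustrate the framing (not a production model), the sketch below turns a dialog state into a feature vector made of the last intent, the filled slots, and the previous action, then predicts the next action with a 1-nearest-neighbour classifier over a few hand-labelled states. The feature layout, action names, and training examples are all hypothetical; a real system would train a stronger classifier, such as a neural network, on many annotated dialogs.

```python
# Feature layout used to vectorize a dialog state (hypothetical)
INTENTS = ["withdraw_money", "check_balance", "affirm"]
SLOTS = ["amount", "account"]
PREV_ACTIONS = ["none", "ask_account", "confirm_withdrawal"]


def featurize(last_intent: str, slots: set[str], prev_action: str) -> list[int]:
    """One-hot last intent + binary slot flags + one-hot previous action."""
    return (
        [int(last_intent == i) for i in INTENTS]
        + [int(s in slots) for s in SLOTS]
        + [int(prev_action == a) for a in PREV_ACTIONS]
    )


# Tiny hand-labelled training set: (state features, next action = class label)
TRAINING = [
    (featurize("withdraw_money", {"amount"}, "none"), "ask_account"),
    (featurize("withdraw_money", {"amount", "account"}, "none"), "confirm_withdrawal"),
    (featurize("check_balance", {"amount"}, "ask_account"), "tell_balance"),
    (featurize("affirm", {"amount", "account"}, "confirm_withdrawal"), "execute_withdrawal"),
]


def predict_action(features: list[int]) -> str:
    """1-nearest-neighbour: return the label of the closest training state."""
    def distance(example):
        return sum((a - b) ** 2 for a, b in zip(example[0], features))
    return min(TRAINING, key=distance)[1]


print(predict_action(featurize("affirm", {"amount", "account"}, "confirm_withdrawal")))
```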

The NLG Component

While NLU turns unstructured human language into structured data, NLG does the reverse: it generates text from structured data.

The NLG component can be compared to an analyst who interprets data and transforms it into words, sentences, and paragraphs. Its primary benefit is that it creates a contextualized narrative, putting the meaning hidden in the data into words and communicating it.

Once the bot knows what to do next, it should generate a response so the user gets some kind of feedback. After receiving it, the user can decide whether they have enough information to finish the interaction or ask further questions.

There are two main solutions here. The easy one is to predefine the responses as templates with placeholders, which are replaced with actual data when the response is generated. The other, dynamic sentence generation, is a little more complicated: it uses Deep Learning to generate the response automatically after injecting some data, and it can also be used to build complex template systems.

A predefined response might look like the following. This kind of template is suited to the straightforward translation of data into text.

“I have found plane tickets from {{from_city}} to {{to_city}}.” The placeholders are {{from_city}} and {{to_city}}, which are replaced by actual data collected during the conversation, giving a response like this: “I have found plane tickets from Madrid to Copenhagen.”
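Filling such a template takes only a few lines. This sketch assumes the `{{name}}` placeholder syntax from the example and uses a regular expression with a replacement function to substitute slot values; the `render` function is hypothetical, and template engines such as Jinja2 offer the same idea with many more features.

```python
import re

# Matches {{name}}, tolerating spaces inside the braces
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, slots: dict[str, str]) -> str:
    """Replace each {{name}} placeholder with the matching slot value."""
    def substitute(match) -> str:
        name = match.group(1)
        if name not in slots:
            # Fail loudly rather than show a half-filled response to the user
            raise KeyError(f"No value collected for placeholder '{name}'")
        return str(slots[name])
    return PLACEHOLDER.sub(substitute, template)


template = "I have found plane tickets from {{from_city}} to {{to_city}}."
print(render(template, {"from_city": "Madrid", "to_city": "Copenhagen"}))
```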

Who knows, maybe GPT-3 gives you the answer for this component!

The Modularized Approach or the End-To-End Approach

So far in this article, I have presented three modules or components of a chatbot: NLU, DM, and NLG. However, there is a different approach under active study, in which a single component receives the user message and generates the response.

The user can say: “I'd like to fly to Copenhagen” => E2E black box that generates the response => There are flights on Monday and Tuesday.

This is the end-to-end (E2E) approach, and it’s quite interesting, but at the time of writing it remains mostly an academic research topic. However, it looks very promising!

The main benefit of the modularized approach, by contrast, is that it lets you control the NLP process in each module, training and improving each one separately. Another benefit is the single responsibility principle: each module is responsible for one thing and one thing only, which makes the code easier to maintain.
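The structural difference shows up clearly in code. In this sketch, the three modules are hypothetical toy functions (the flight days are hard-coded from the example above): the modular bot is just a composition of NLU, DM, and NLG, and any stage can be swapped or retrained without touching the others.

```python
from typing import Callable

Frame = dict   # semantic frame produced by the NLU
Action = dict  # action selected by the DM


def toy_nlu(message: str) -> Frame:
    # Hypothetical NLU stand-in: takes the last word as the destination city
    city = message.rstrip(".!?").split()[-1]
    return {"intent": "book_flight", "entities": {"to_city": city}}


def toy_dm(frame: Frame) -> Action:
    # Hypothetical DM stand-in: a real one would track state and query a flight API
    return {"name": "inform_flights", "to_city": frame["entities"]["to_city"],
            "days": ["Monday", "Tuesday"]}


def toy_nlg(action: Action) -> str:
    # Template-based generation from the selected action
    days = " and ".join(action["days"])
    return f"There are flights to {action['to_city']} on {days}."


def modular_bot(message: str,
                nlu: Callable[[str], Frame] = toy_nlu,
                dm: Callable[[Frame], Action] = toy_dm,
                nlg: Callable[[Action], str] = toy_nlg) -> str:
    """Each stage is a separate, replaceable module with one responsibility."""
    return nlg(dm(nlu(message)))


print(modular_bot("I'd like to fly to Copenhagen"))
```

An end-to-end bot would collapse the whole chain into a single trained model with the signature `Callable[[str], str]`, trading this per-module control for a single optimization target.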

Choose the Right Approach for Your Project

If you are researching the possibilities offered by ML, you can opt for the modular approach with each module making use of different ML techniques, or you can use the end-to-end approach. Another option is to combine them — for example, implementing an ML-based NLU module together with a Frame-based dialog manager and a template-based NLG.

There are many possibilities and the limit is our imagination. I encourage those interested in building a bot to dig deeper into the different options discussed in this article to discover which is the right choice for your project.